Artificial intelligence abilities are advancing at a rapid pace. At the same time, businesses are experimenting with AI applications, often combining several abilities to accomplish complex tasks. These applications typically fall into the following categories:

Cognitive Insight

Cognitive insights are essentially AI-enabled analytics. Like traditional analytics, they use algorithms to analyse data and interpret identified patterns. AI models can be more detailed and data-oriented than traditional analytics and are trained using large domain-specific datasets. Unlike traditional analytics, cognitive insight applications also use machine learning to discover non-intuitive patterns and can improve the accuracy of their predictions over time as they gain experience.

Insight marketing

Research shows that sales and marketing are two of the top priorities for companies investing in AI technologies: 64% of early AI adopters are already applying derived insights for these purposes. [1] One Dutch transportation company (that was already using advanced analytics) recently implemented a cognitive insight solution to improve lead generation. [2] With 70 subsidiaries, the company had access to a massive amount of data and wanted to ensure all its sales opportunities were being leveraged. By using cognitive insight tools to mine the unstructured data for pricing and market trends, the company was able to generate new leads and adjust current pricing to optimize revenue.

Insight-driven healthcare

Cognitive insights are applicable to nearly any data-driven business and are often used in combination with other AI applications. In the healthcare industry, cognitive insights are assisting doctors in diagnostic decision-making by evaluating medical data sets to predict probable diagnoses. In bioinformatics and the pharmaceutical industry, scientists are using cognitive insight software to identify patterns in large data sets, such as genomes (see Deep Genomics [3]). Deep-learning AI technologies, like Atomnet, [4] analyze the structure of proteins known to cause certain diseases and generate insights into targeted drug design. Insights like these are advancing medical research, treatment, and product design into a new era of insight-driven possibilities.

Cognitive vs. human

Historically, data curation has been a labour-intensive task. Reviewing and analyzing massive data sets, such as genomes, exceeds the scope of human ability. Cognitive insights improve a task already better suited to machines than to humans, augmenting human employees’ value rather than replacing it. By using cognitive insights, companies can save employees time, increase employee productivity, and improve the quality and efficiency of business processes. Unlike traditional analytics, the value produced by cognitive insights will only increase with time as the models learn to produce more accurate and valuable results.

Cognitive insights are also adaptable to multiple uses, including use alongside AI-enabled engagement tools. New approaches to large-volume data are also being developed that use cognitive insights to generate value.

[1] https://www.ibm.com/watson/advantage-reports/market-report.html

[2] https://www.ibm.com/watson/advantage-reports/cognitive-business-lessons/customer-acquisition.html

[3] https://www.deepgenomics.com/

[4] Wallach, Izhar, Michael Dzamba, and Abraham Heifets. “AtomNet: A deep convolutional neural network for bioactivity prediction in structure-based drug discovery.” arXiv preprint arXiv:1510.02855 (2015).

Diagnostic Decision-making

Artificial intelligence is poised to bring major changes and advancements across many industries, one of the most promising being healthcare. AI tools, such as IBM’s Watson, combine AI’s deep learning, natural language processing (NLP), and machine vision abilities to provide advanced insights. These insights can assist doctors in diagnostic decision-making, changing the way diseases are identified and fought.

Watson: How AI can work alongside doctors

Since Watson debuted its skills on Jeopardy! in 2011, IBM has spent upwards of $4 billion buying companies that possess huge amounts of medical data. [1] This data—including patient histories, MRI images, and billing records—has been used as Watson’s training data. Through machine learning, Watson has become incredibly precise in its predictions: a study by the University of North Carolina School of Medicine found that 99 per cent of the time, Watson made the same diagnoses and treatment recommendations for cancer patients as the best human experts. Researchers also found that in 30 per cent of cases, Watson identified optimal treatment options that doctors had overlooked. These recommendations were thanks to Watson’s ability to process a massive amount of data, including thousands of research papers that humans could not possibly be expected to consume and process.

Watson’s diagnostic abilities were put to the test in Japan in 2016. [2] At the time, a group of Japanese doctors were struggling to determine from which rare type of leukaemia a patient was suffering. They provided Watson with the patient’s genetic data, which was then compared to a database to identify genetic mutations unique to different types of leukaemia. Watson identified the patient’s illness as a rare secondary type of leukaemia caused by myelodysplastic syndrome, allowing doctors to adjust her treatment plan appropriately. Reviewing the patient’s genetic mutations and comparing them to other cases in a massive data set would have taken a doctor at least two weeks with no guarantee of finding a pattern match; it took Watson just ten minutes.

How does AI perform diagnostic decision-making?

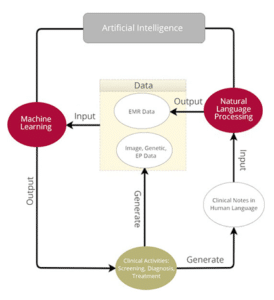

To perform a diagnostic assessment, these types of tools combine several AI abilities, including natural language processing and machine vision.

The figure above illustrates how data is fed through the machine-learning algorithm.

To make a diagnostic prediction, the system must evaluate the patient’s traits, including age, gender, racial background, test results, and medical history. This may include diagnostic imaging (interpreted using machine vision technology), clinical symptoms, clinical notes (given structure using NLP), current medications, and so forth. By evaluating the case-specific data and comparing it with data collected in other cases and research around the world, AI applications assist doctors in critical diagnostic decision-making. With the help of AI, proper diagnoses can be made earlier, preventing the deterioration of a patient’s condition and putting them on the road to recovery more quickly.

Engagement

Artificially intelligent engagement tools primarily use natural language processing (NLP) to interact with employees or customers. Engagement tools, whether chatbots or intelligent virtual agents (IVAs), interact with humans through text or audio conversations. The first chatbots were designed to answer user questions by identifying keywords and speech patterns that could inform a response. Microsoft’s infamous Clippy was a classic chatbot assistant who did his best to direct users to their desired information but possessed no awareness of their goal and thus delivered a sub-optimal and much-maligned end-user experience.
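To make the keyword approach concrete, here is a minimal sketch in Python. The keyword table, the canned replies, and the `reply` function are all hypothetical and invented for illustration; they do not reproduce any real product's logic.

```python
import re

# Hypothetical keyword-to-response table, invented for this sketch.
RESPONSES = {
    "print": "To print, open the File menu and choose Print.",
    "save": "To save, open the File menu and choose Save.",
    "letter": "It looks like you are writing a letter. Would you like help?",
}
FALLBACK = "Sorry, I did not understand. Could you rephrase?"


def reply(message: str) -> str:
    """Return the canned response for the first known keyword in the message."""
    # Lowercase the message and split it into plain words.
    words = re.findall(r"[a-z]+", message.lower())
    for word in words:
        if word in RESPONSES:
            return RESPONSES[word]
    # No keyword matched, so the bot has nothing useful to say.
    return FALLBACK
```

Calling `reply("Please do not save my file")` still returns the instructions for saving, because the bot reacts to the keyword and has no model of what the user is trying to achieve. This is the limitation that context-aware tools aim to overcome.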

Intelligent virtual agents

Today, AI engagement tools are moving beyond the basic abilities of traditional chatbots to the more context-aware model of IVAs. These enhanced AI tools have an advanced understanding of language, allowing them to interpret user goals with very high accuracy. They do not simply parse words and grammar and apply pre-established logic in response; they understand nuanced word combinations and context, and thus meaning, which lets them perform much more complex tasks in response. Some can even recognize and respond to human emotions.

Many technology companies are scrambling for dominance in the virtual assistant field. Apple’s Siri, Google’s Assistant, Amazon’s Alexa, and Microsoft’s Cortana inhabit almost every personal mobile device and laptop in the world, as well as a good many kitchens and living rooms. However, the abilities of these tools to perform basic tasks, such as playing music or setting an alarm, represent only a fraction of the potential uses of IVAs.

IVAs in use

The healthcare industry has been introducing IVA applications to manage customer interactions outside of hospital settings. Woebot, for example, converses daily with users, tracking their moods and providing support options when it detects an issue. Use of the app has been reported to reduce the symptoms of stress and anxiety. [1] Another IVA, GetAbby’s Care Coach [2], is being used by the Congestive Heart Failure Pilot Program to “instruct, educate, monitor, and remind” patients of proper post-operative healthcare. Patients can interact with a virtual avatar at any time of the day, helping them to manage their medications, appointments, diet, and exercise.

The retail industry is also experimenting with AI engagement. The AI customer service platform DigitalGenius, [3] for example, reviews customer service transcripts to identify successful interactions. This data is then used to automate basic customer service interactions and to suggest effective responses for employees managing more complicated service tasks.

The benefits of IVAs

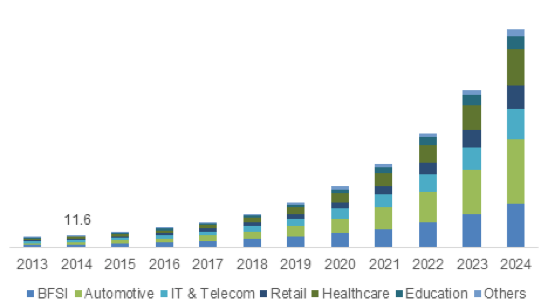

With the widespread applicability and potential benefits of engagement tools, the IVA global market is expected to grow to nearly $16 billion by 2024. [4] These engagement tools can perform many of the basic interaction functions of a human employee, but they do not require sleep or even a physical building in which to work. They are easily accessible (processing information as text, a digital message, and even voice or video) and always available: an IVA is never busy with another customer and never has to put anyone on hold. While human employees can reserve their efforts for more high-value customer engagements, IVAs can be scaled to provide basic support to a much larger audience.

As AI technology advances and models gain the experience necessary to improve themselves, IVAs will gain more human-level intuitiveness. Through experience, they will manifest a greater area of expertise than most human employees. This will enhance computer systems’ ability to anticipate and respond to clients’ needs while also adding to the knowledge base available to human employees. Intelligent virtual agent technologies will undoubtedly continue to be adopted more broadly and establish themselves as invaluable tools across most industries.

Although not all business tasks require communication or engagement solutions, they can still benefit from other AI solutions. Robotic process automation (RPA), for instance, can also free up employees for high-value tasks, simultaneously saving a business both time and money. Learn more about robotic process automation.

[1] https://www.businessinsider.com/stanford-therapy-chatbot-app-depression-anxiety-woebot-2018-1

[2] https://www.russellresources.com.au/single-post/2016/03/03/GetAbby-arrives-in-Australia-the-only-Human-Avatar-Care-Coach-for-patients

[3] https://www.digitalgenius.com/

[4] https://www.grandviewresearch.com/press-release/global-intelligent-virtual-assistant-industry

[5] https://www.gminsights.com/industry-analysis/intelligent-virtual-assistant-iva-market

Process Automation

Basic robotic process automation (RPA) is the automated execution of basic processes based on a predetermined set of rules. It can improve business process quality, delivery, and cost-effectiveness. However, if a process encounters a judgment-based decision, it must stop and wait for human intervention. This can result in bottlenecks and reduced process efficiency. When RPA software robots are combined with AI, their abilities are greatly enhanced and their limitations mitigated.
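The rule-based behaviour described above, including the hand-off to a person, can be sketched in a few lines of Python. Everything here is an illustrative assumption: the `Invoice` fields, the `AUTO_APPROVE_LIMIT` threshold, and the rules themselves are invented and are not taken from any RPA product.

```python
from dataclasses import dataclass, field


@dataclass
class Invoice:
    invoice_id: str
    amount: float
    has_purchase_order: bool


@dataclass
class Outcome:
    approved: list = field(default_factory=list)
    rejected: list = field(default_factory=list)
    needs_human: list = field(default_factory=list)


AUTO_APPROVE_LIMIT = 1000.0  # hypothetical threshold, chosen for this sketch


def process(invoices):
    """Apply fixed rules to each invoice and queue anything the rules do not cover."""
    outcome = Outcome()
    for inv in invoices:
        if inv.amount <= 0:
            # Rule 1: a non-positive amount is always invalid.
            outcome.rejected.append(inv.invoice_id)
        elif inv.has_purchase_order and inv.amount <= AUTO_APPROVE_LIMIT:
            # Rule 2: small invoices backed by a purchase order are approved.
            outcome.approved.append(inv.invoice_id)
        else:
            # No rule applies: this is the judgment call that waits for a person.
            outcome.needs_human.append(inv.invoice_id)
    return outcome
```

In this sketch the `needs_human` queue is the bottleneck. An AI-enhanced robot would aim to shrink that queue by handling some of those judgment calls itself.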

How does RPA work?

RPA software robots are tremendously flexible because they can interact with systems using both programming interfaces and user interfaces designed for human employees. Unlike human employees, these robots can perform steps quickly and consistently twenty-four hours a day. In any given sequence, RPA software robots can perform over 600 actions. This ability to execute long, complicated processes makes RPA a compelling replacement for human labour in many situations.

In 2017, NASA launched four separate RPA pilot projects [2] focused on automating processes in several departments. In the financial department, human employees work alongside RPA robots: every time a human approves a budget, an RPA software robot distributes the appropriate funds to each office and delivers a spreadsheet of audited balances to NASA headquarters. Another project uses RPA robots in the human resources department. These robots process service requests by reviewing emails, adjusting schedules, and assigning the appropriate staff member to the case. NASA is currently making plans for the agency-wide rollout of these RPA projects.

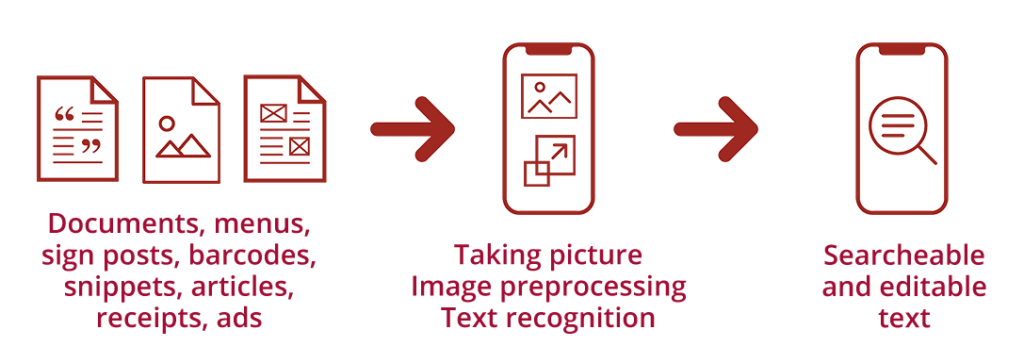

To complete the tasks required by the NASA project, RPA software robots had to be able to process any information a human employee would. Because of the complexity of the process, the system needed to demonstrate human-like judgment and interpret language ambiguity, nuances, and context. There was also the added difficulty of translating information that was delivered in paper form. To address this, RPA software robots used machine vision (often combined with optical character recognition to better “read” a document’s content) and natural language processing (NLP) to better understand a document’s meaning (see figure above). Neural network capabilities can also be used to automate judgment and decision-making in many situations.

What are the benefits of AI-enhanced RPA?

Robotic process automation is an incredibly pragmatic and cost-effective means of improving productivity. However, traditional RPA struggles with the efficiency constraints of its context dependency and inability to make judgment-based decisions; AI-enhanced RPA can remove these process bottlenecks in many situations. AI-enhanced RPA’s extended skill set ensures that it can be applied to a much broader class of business processes.

Because RPA software robots mimic human actions using existing interfaces, companies do not have to make changes to their systems. The robots themselves are so easily programmed that minimal involvement from the IT department or expensive programmers is required. The entire system is scalable, as the simple programming makes it possible for multiple users to deploy additional robots to handle tasks as needed. This means that, in most cases, businesses see a positive ROI in just five to nine months.

Contact the Burnie Group today to find out how your business can benefit from RPA.

[1] https://paperlessocr.com/robotic-process-automation-rpa-ocr/

[2] https://gcn.com/articles/2018/06/04/nasa-rpa.aspx

Volume Processing

The amount of data in the world is growing exponentially. It is estimated that by 2025, we will be producing 163 zettabytes annually: ten times the amount of data produced in 2017 alone. [1] While much of this growth is human-generated (mostly in the form of video), increasingly vast amounts of data are being generated by network-connected Internet of things sensors, machine vision sensors, and computer simulations.

This explosion of data presents multiple challenges, including storage limitations, system management, and a shortage of the skills required to integrate big data systems. Even if these challenges are addressed, human beings simply cannot process and make sense of such large quantities of data. Artificial intelligence, on the other hand, is capable of processing very large volumes of data and extracting insights. However, just as this AI ability is being considered as a tool for large-volume data processing, another technology with even greater processing power and data generation potential is emerging: quantum computing.

What is quantum computing?

Quantum computing is a radically new kind of computer architecture that, instead of using binary bits to perform calculations, uses qubits, which can exist in a superposition of multiple states at once. The implications of this new approach for some types of calculations are staggering. Calculations that would take the biggest computers on Earth billions of years could be completed in just a few hours. The volume of data generated by this kind of system will be enormous, enabling advanced modelling and simulations capable of identifying new drugs or interpreting the traffic patterns of every vehicle in a city. The potential to create value is vast, but only advanced AI tools will be able to process all this data and make sense of it.
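The idea of a qubit holding more than one state at once can be written compactly. As a standard textbook sketch (the symbols ψ, α, and β are notation introduced here, not taken from the text above), the state of a single qubit is a weighted combination of the two classical bit values:

```latex
|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle, \qquad |\alpha|^2 + |\beta|^2 = 1
```

Measuring the qubit yields 0 with probability |α|² and 1 with probability |β|². A register of n qubits is described by 2^n such weights, so 50 qubits already correspond to 2^50, or roughly 10^15, weights. This rapid growth is one way to see why some calculations that overwhelm classical machines may become tractable.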

Quantum computers will be hugely influential for AI technologies, as they will both accelerate the training process of neural networks and increase the amount of data they can evaluate. The basic building blocks of quantum computing—mainly algorithms and processors—are still in development. As these, as well as quantum programming languages and cloud services, become available, developers will be quick to incorporate their power into software solutions. Hybrid systems, combining classical and quantum computing, will inevitably emerge. This current period of learning and development is critical to increasing business awareness so that once quantum potential is achieved, adoption will be quick to follow.

[1] https://www.forbes.com/sites/andrewcave/2017/04/13/what-will-we-do-when-the-worlds-data-hits-163-zettabytes-in-2025/

Sensing

Understanding the world around us allows us to interact with it. We derive this understanding by interpreting the input from our senses. Some AI technologies have been designed to mimic human senses, thus enabling real-time, real-world interactions. Neural networks mimic the human brain to process the sensory input. Swarm technology emulates the collective intelligence of groups of humans. Artificially intelligent engagement tools can impersonate human conversation and understand the conversational meaning.

To accomplish tasks requiring human sight, sensing applications rely on machine vision. Using a system of cameras and sensors, machines render an understanding of real-world objects and the environment. This technology has seen high adoption and advancements in the areas of facial recognition, vehicle autonomy, and the Internet of things. The acuity of these sensory AI systems can be significantly enhanced by generative adversarial networks (GANs), in which AI systems actually train one another to improve through direct competition.

Facial Recognition

The global facial recognition technology market is projected to generate $9.6 billion in revenue by 2022. [1] Using machine vision, cognitive sensing applications scan faces, dividing them into comparable data points. These data points are compared to the application’s training data to generate a prediction (e.g. the system is 97% sure the face it sees is that of a particular user). Some tools can even identify human emotions. While there are obvious privacy concerns, facial recognition has almost countless productive and valuable use cases. It is already being tested and implemented within the banking, healthcare, and retail industries.
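The comparison step described above can be sketched as follows. The sketch assumes each face has already been reduced to a non-zero list of numbers (an "embedding") by some upstream vision model that is not shown. The function names, the three-number example vectors, and the 0.9 threshold are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two non-zero vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(probe, enrolled, threshold=0.9):
    """Return (name, score) for the closest enrolled face.

    The name is None when even the best score falls below the threshold.
    """
    name, score = max(
        ((n, cosine_similarity(probe, vec)) for n, vec in enrolled.items()),
        key=lambda pair: pair[1],
    )
    return (name, score) if score >= threshold else (None, score)


# Hypothetical enrolled users with toy three-number embeddings.
enrolled = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
name, score = best_match([0.9, 0.1, 0.0], enrolled)
```

A similarity score is not itself a probability. Production systems typically calibrate such scores before reporting a figure like "97% sure", and that calibration step is omitted here.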

Banking

MasterCard is leading the way in facial recognition for consumer banking. Its Identity Check Mobile app is currently available in 34 markets and will be launching in Europe in April 2019. [2] The app circumvents the need for traditional passwords, scanning users’ fingerprints or faces to verify identity for online payments. Over 93% of consumers say they prefer using biometrics—their fingerprint or face—to using a traditional password for financial services. In addition to ease of use for consumers, the technology also increases digital security and helps financial institutions fight fraud.

Healthcare

Electronic pain assessment tool (ePat) apps (developed by ePat Technologies [3]) are being used to provide care assistance both inside and outside of hospital settings. PainChek [4] is an ePat app being used to treat patients who have difficulty communicating, such as those with dementia. The app detects nuances in facial expressions associated with levels of pain. Users input additional data for non-facial pain cues, including vocalizations, movements, and behaviours. This data is aggregated to produce a “severity of pain” score. Illnesses such as dementia are often associated with communication difficulties, which can lead to erratic behaviour due to undiagnosed pain. These types of applications allow medical consultants to understand the root causes of severe behaviours and treat the associated pain more effectively.

Retail

The retail industry has been having a somewhat harder time finding acceptable uses of facial recognition technology. A retail app called Facedeals targeted customers by sending special offers from businesses to their phones. [6] Facial recognition cameras installed at the entrance of a business allowed the app to recognize customers when they entered a store. The app reviewed customers’ “like” history on Facebook and pushed deals to their phones accordingly.

This use of facial recognition technology is fraught with ethical challenges. Although personalized marketing is proven to be persuasive, there is a point at which consumers find it to be unnerving and invasive. This was the case for Facedeals, which was forced to rebrand itself as Taonii. The app still offers tailored deals based on location but no longer uses facial recognition.

Sensing technologies can recognize more than just your face; they can recognize the entire world around you.

[1] https://www.alliedmarketresearch.com/press-release/facial-recognition-market.html

[2] https://newsroom.mastercard.com/eu/press-releases/mastercard-establishes-biometrics-as-the-new-normal-for-safer-online-shopping/

[3] https://www.epattechnologies.com/news/smart-app-identifies-pain/

[4] https://www.painchek.com/industry-news/smart-app-identifies-pain-the-senior/

[5] https://www.australianageingagenda.com.au/2017/10/20/national-aged-care-dementia-service-trial-facial-recognition-app/

[6] https://techcrunch.com/2012/08/10/facedeals-check-in-on-facebook-with-facial-recognition-creepy-or-awesome/

Autonomous Vehicles

The act of driving is a complicated task involving awareness, control, and continuous adjustment. The human brain must ingest and interpret a massive amount of data, comparing it to experience and knowledge of vehicle performance. Drivers must also consider the potential actions of other drivers in different conditions. The ability to safely operate a vehicle can be impeded by weather conditions, fatigue, distraction, or impairment; therefore, there is always a substantial chance of error.

A recent NHTSA study estimated that 94% of all car accidents are caused by human error. [1] What if all those accidents could be avoided simply by removing the human from the equation? What if a vehicle, featuring an AI computing system, could not only safely navigate the driving world but could also learn to be a better driver, both through direct experience and remotely by ingesting the experiences of millions of other vehicles? AI-equipped autonomous vehicles have the potential to deliver dramatic savings in insurance, congestion, and repairs, as well as in human lives. Vehicles with cameras, sensors, and onboard AI systems are already on the roads. They are set to increase in numbers and ability, undoubtedly transforming the transportation landscape.

How do autonomous vehicles work?

For several years now, AI engines have been leveraging GPS information, live traffic information, and historical patterns of other vehicles to find the optimal route to a destination. What is new is that AI-equipped autonomous vehicles have gained the ability to safely navigate the world using a combination of sensors (GPS, cameras, radar, LiDAR, and ultrasound) and deep learning AI capabilities, which can make decisions about accelerating, braking, and turning. These capabilities allow a car to “see” and react to the world.

The future of autonomous vehicles

With the development of more advanced AI capabilities, AI-enhanced driver assistance programs, like Tesla’s Autopilot, are moving toward fully autonomous systems. These systems will integrate even more IoT devices and smarter AI decision-making engines, trained using even larger datasets. These cars will not only be able to drive by themselves, but keep themselves updated, maintained, and well-trained. With some estimates saying autonomous vehicles could be commercially released within the next two years, car and trucking companies around the world are preparing for the transition from no or limited autonomous features to fully automated, intelligent vehicles.

Autonomous vehicles currently carry a high price tag due to the cost of multiple sensors, system maintenance, technical refinement, and insurance and liability issues. For this reason, autonomous vehicles will likely be used for business and public transport before they are commonplace in private driveways. Many car manufacturers and AI developers are teaming up with other companies to develop their own autonomous fleets: rideshare companies Lyft and Uber have paired with Drive.ai and Volvo, respectively, to develop a self-driving branch of their rideshare services [3] [4]; Ford recently launched a project with Domino’s Pizza to test self-driving delivery vehicles [5]; and Toyota has plans to debut its “e-Palettes” (autonomous, multifunctional vehicles that will transport people and cargo) in 2020. [6] With the idea that no autonomous vehicle should sit idle if it has the potential to generate some type of value, Toyota projects multiple, adaptable uses, including shared vehicles, public transit, movable stores, cargo vehicles, and more.

This investment in commercial-use autonomous vehicles will undoubtedly influence the adoption of private autonomous vehicles. As technology improves and costs decrease, people may become more comfortable with the idea of handing over complete control to a machine. Users may also be tempted by significantly lower insurance premiums thanks to the greatly reduced risk of accident associated with autonomous driving.

[1] https://blog.lawinfo.com/2017/09/06/human-error-causes-94-percent-of-car-accidents/

[2] https://www.wattknowledge.com/blog/autonomous-cars-data

[3] https://www.businessinsider.com/lyft-driveai-partnership-2017-9

[6] https://www.theverge.com/2018/1/8/16863092/toyota-e-palette-self-driving-car-ev-ces-2018

The Internet of Things

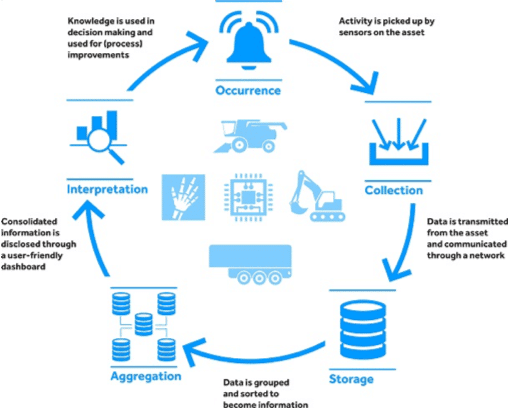

The Internet of things (IoT) is the great variety of network-attached sensors and actuators that are increasingly embedded into many aspects of our lives and businesses. IoT devices can deliver input to AI engines, allowing for both a visual and an analytical awareness. The graphic above illustrates how information collected by sensors is distributed across the IoT.

Connected home devices

By integrating data from different connected IoT devices, an AI-enabled smart home can share relevant information and insight with applications to address different needs. Google’s Nest thermostat, for instance, is a network-attached IoT sensor. The thermostat can be controlled wirelessly from a user’s phone. It can also leverage machine-learning algorithms, learning to recognize a user’s behaviour patterns and pre-emptively adjust temperatures accordingly. It can also adjust itself by using a user’s zip or postal code to incorporate current weather conditions into its decisions.
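As a rough illustration of what "learning a user's behaviour patterns" could mean, the sketch below averages the temperatures a user has chosen at each hour of the day and proposes that average the next time the hour comes around. This is a deliberately simple stand-in and not the algorithm any commercial thermostat uses. The class and method names are hypothetical.

```python
from collections import defaultdict


class LearningThermostat:
    """Toy model: remember manual settings per hour and replay their average."""

    def __init__(self, default_temp=20.0):
        self.default_temp = default_temp
        # Maps an hour of the day (0-23) to the temperatures chosen at that hour.
        self._history = defaultdict(list)

    def record_manual_setting(self, hour, temp):
        """Store a temperature the user picked by hand at the given hour."""
        self._history[hour].append(temp)

    def predicted_setpoint(self, hour):
        """Suggest a temperature for the hour, falling back to the default."""
        temps = self._history.get(hour)
        if not temps:
            return self.default_temp
        return sum(temps) / len(temps)


thermostat = LearningThermostat()
thermostat.record_manual_setting(7, 21.0)
thermostat.record_manual_setting(7, 23.0)
morning_target = thermostat.predicted_setpoint(7)  # average of the two settings
```

A real device would also weigh factors such as occupancy and outdoor weather. The point here is only that past behaviour becomes the basis for a pre-emptive adjustment.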

Connected vehicles

Many vehicles (particularly autonomous vehicles) also make use of large numbers of IoT devices. Sensors in a car can collect many data points from different aspects of the car’s performance and environment. If sensors detect an error, this information can be transmitted across the IoT network to different departments, contacting the nearest dealership, ordering replacement parts, and checking the driver’s insurance for coverage. An IoT-enabled vehicle (particularly an autonomous vehicle) not only saves drivers and manufacturers time and money but also provides a safer driving experience.

The future of connected devices

The potential for IoT devices is vast and growing. Millions of connected devices are being integrated into products every year. In 2016, 104 million wearable devices (such as Apple Watches) were shipped. [3] This number is projected to grow to 240.1 million devices by 2021. The number of connected home devices in the U.S. alone is projected to surpass 1 billion by 2023—totalling more than $90 billion in spending. These devices will generate huge amounts of data. The potential value of this IoT data is only now being realized using advanced analytics and other AI applications that can identify patterns in these large datasets.

However, the kinds of problems being solved using typical AI engines are relatively narrow owing to the task-specific nature of their design and training data set. A different approach is required to tackle more complex problems. This is the domain of a new area of AI that focuses on collective, or swarm, intelligence.

[1] https://www.dllgroup.com/au/en-au/solutions/internet-of-things

[2] https://www.businessinsider.com/iot-forecast-book-2018-7

Complex Problem Solving

Artificial intelligence technologies are very good at performing specific tasks. Machine vision can identify different faces in an image; natural language processing (NLP) can be used to read through thousands of pages of contracts to identify inconsistencies. Businesses can use AI-enhanced robotic process automation (RPA) to execute decision-oriented process tasks with increased speed and efficiency. These abilities save businesses both time and money and dramatically improve customer experiences. However, in the real world, problems are often far more complex. For instance, how can an AI system be trained to optimize urban traffic patterns with all its many variables? Is it possible for a machine to make large-scale investment decisions or analyse global conflicts?

One way to address the world’s more complicated problems is to mimic the world’s own problem-solving techniques. Bio-inspired AI is a field of robotics that designs intelligent robotic systems based on natural processes. [1] Many of these systems focus on swarm intelligence: the collective behaviour of decentralized organisms that allows them to complete complex tasks without specific direction. A single ant, like a single computing system, can perform a limited range of basic tasks. But an ant colony can build cities, wage war, farm, and form superhighways for food delivery. Similarly, swarms of robots can be trained to build and navigate complex patterns, autonomously troubleshooting without directly communicating with each other (see China’s 2017 drone launch [2]). However, the applications of swarm intelligence for complex problem solving go far beyond the realm of AI robotics.

Swarm intelligence

Wisdom-of-the-crowd applications and prediction markets have demonstrated the potential of swarm intelligence for many years. The AI company Unanimous AI was one of the first to combine human crowd intelligence with AI. Unanimous AI’s platform connects people from around the world and collects their opinions on topics. Artificial intelligence-enhanced analytics analyze the collected data, evaluate how contributors arrived at their decisions and make predictions accordingly. This technology allowed Unanimous AI to predict the Kentucky Derby 2016 superfecta: the order of the first four horses to cross the finish line. As the superfecta is infamously difficult to predict, those who placed a $20 bet on Unanimous AI’s predictions walked away with $11,000. [3]

Unanimous AI’s predictions demonstrate how a collective human intelligence can work with an AI system to generate valuable and accurate predictions. However, as AI continues to develop, it continues to prove itself to be more efficient and accurate than humans at performing specific and specialized tasks. It stands to reason that a collective machine intelligence may be superior to a collective human one for some problem domains.

Machine swarm intelligence

An early example of collective machine intelligence is SingularityNET, the world’s first decentralized AI network. The network is a marketplace where a swarm of AI engines, each optimized and trained for different tasks, can work together to solve complex problems. [4] SingularityNET connects human contributors from around the world. These contributors can exchange their AI tools and solutions for tokens, resources, and services.

Each new AI tool becomes an “agent” of the platform. These agents can receive requests from users to perform specific tasks. If an agent encounters a task it is not trained to do (perhaps the agent is excellent at transcribing video but does not have the NLP skills to create a summary of the text), it forwards that task to another agent that has that specialized skill. Recommendations of which agents are best at completing certain tasks are rewarded using a blockchain-based reputation token system. In this way, the entire network of AI engines is able to grow and self-organize, working together to solve problems that individual AI engines could not solve.
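The forwarding behaviour described above can be sketched with a small Python class. This is an illustrative toy and not SingularityNET's actual protocol or API: the `Agent` class, its `handle` method, and the example skills are hypothetical, and the blockchain-based reputation system is left out entirely.

```python
class Agent:
    """Toy agent that performs a task itself or forwards it to a peer."""

    def __init__(self, name, skills):
        self.name = name
        self.skills = skills  # maps a skill name to a function
        self.peers = []       # other agents this agent knows about

    def handle(self, skill, payload, visited=None):
        """Return (agent_name, result), or None if no reachable agent can help."""
        if visited is None:
            visited = set()
        visited.add(self.name)
        if skill in self.skills:
            return self.name, self.skills[skill](payload)
        # This agent lacks the skill, so ask each peer not yet consulted.
        for peer in self.peers:
            if peer.name not in visited:
                result = peer.handle(skill, payload, visited)
                if result is not None:
                    return result
        return None


# Hypothetical agents: one transcribes video, the other summarizes text.
transcriber = Agent("transcriber", {"transcribe": lambda video: f"transcript of {video}"})
summarizer = Agent("summarizer", {"summarize": lambda text: text.split(".")[0]})
transcriber.peers.append(summarizer)
summarizer.peers.append(transcriber)

answer = transcriber.handle("summarize", "First point. Second point.")
```

Here `answer` names the summarizer as the agent that did the work, even though the request was sent to the transcriber. The `visited` set keeps two agents that list each other as peers from forwarding a request back and forth forever.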

SingularityNET is a relatively new company (the token system has only been available since January 2018), but this form of collective machine intelligence may very well be the future of AI. It is far simpler and more cost-effective to form a network of specialized AIs than to design a single generalized intelligence. It is also an actionable solution for businesses that require a more flexible AI solution than a single AI engine can offer. These adaptive swarms of AI systems working together may make tackling complex problems an affordable reality in the not too distant future.

[1] https://www.omicsonline.org/directions-for-bio-inspired-artificial-intelligence-jcsb.1000e102.php?aid=8959

[2] http://www.ehang.com/news/365.html

[3] https://www.forbes.com/sites/alexkay/2017/05/03/kentucky-derby-partnering-with-a-i-company-that-correctly-predicted-last-years-superfecta/#670b3f232aba

[4] https://hackernoon.com/a-better-insight-of-singularitynet-607fd64e18c4
