Welcome to your AI and Machine Learning Knowledge Hub at Burnie Group

What is Artificial Intelligence?

Artificial intelligence (AI) is the theory and area of computer science that develops systems designed to perform tasks that normally require human intelligence. The concept of AI has existed for decades; Alan Turing famously posed the question “Can machines think?” in his 1950 paper “Computing Machinery and Intelligence.” However, the technology is still in its early stages, and we are only now coming to terms with its massive potential.

Artificial intelligence is already being used to improve processes and solve complex problems that previously only humans could address. AI technologies such as machine learning, deep learning, neural networks, and generative adversarial networks have advanced significantly in recent years and are being used to accomplish complex tasks that were never before possible. With AI, machines are gaining the ability to understand language (natural language processing), see (machine vision), and make informed decisions (cognitive computing). These abilities are being applied across industries to perform tasks and solve problems.


Topics: Machine Learning, Artificial Neural Networks, Deep Learning, Generative Adversarial Networks, Machine Vision, Natural Language Processing, and Cognitive Computing.

To learn how your business can benefit from AI, reach out to Burnie Group today.

CONNECT WITH US

What is Machine Learning?

Machine learning is the area of AI that allows computer systems to consume large data sets, discover patterns, and learn to perform specific tasks without being explicitly programmed to perform those tasks.

There are two main types of machine learning: supervised and unsupervised. In supervised machine learning, a model is trained on a labelled data set, in which each example is paired with the correct answer, and it learns to predict those answers for new examples. In unsupervised machine learning, a model uses algorithms to draw conclusions from unlabelled data, producing outcomes by analyzing the patterns it identifies in that data. A common example is clustering, in which the model groups similar data points together.
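To make clustering concrete, here is a minimal sketch of k-means, one common clustering algorithm, in plain Python. The function name `kmeans`, the 2-D point format, and the sample points are illustrative assumptions rather than part of any particular product. Note that the model never sees a label; it only groups points that lie close together.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Group unlabelled 2-D points into k clusters using basic k-means."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centroids, clusters


# Hypothetical unlabelled data with two visible groups.
points = [(1, 1), (1.2, 0.8), (0.9, 1.1), (8, 8), (8.2, 7.9), (7.8, 8.1)]
centroids, clusters = kmeans(points, k=2)
print(sorted(centroids))
```

On these six points the two centroids settle near (1.03, 0.97) and (8.0, 8.0), one for each group, without the algorithm ever being told which points belong together.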

There are many different types of machine learning models, each with its own set of algorithms. These include support vector machines, which perform non-probabilistic classification of items into categories (for example, identifying parts of an image as face or non-face), and Bayesian networks, which model probabilistic relationships between variables, such as symptoms and diseases. Two of the most promising machine learning approaches currently being explored are neural networks and deep learning.
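The symptom-and-disease example can be sketched as the smallest possible Bayesian network: a single Disease node with an arrow to a single Symptom node, where inference reduces to Bayes' rule. The function name and all probabilities below are hypothetical values chosen only for illustration, not medical data.

```python
def p_disease_given_symptom(p_disease, p_symptom_if_disease, p_symptom_if_healthy):
    """Posterior P(disease | symptom) for a two-node network Disease -> Symptom."""
    # Total probability of observing the symptom, summed over both disease states.
    p_symptom = (p_symptom_if_disease * p_disease
                 + p_symptom_if_healthy * (1 - p_disease))
    # Bayes' rule: P(D | S) = P(S | D) * P(D) / P(S)
    return p_symptom_if_disease * p_disease / p_symptom


# Hypothetical values: 1% prevalence, symptom present in 90% of cases
# and in 5% of healthy people.
posterior = p_disease_given_symptom(0.01, 0.90, 0.05)
print(f"P(disease | symptom) = {posterior:.3f}")  # prints 0.154
```

Even a fairly reliable symptom yields only about a 15% probability here because the disease is rare; weighing evidence against prior likelihood in this way is the kind of reasoning Bayesian networks are built to capture.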

It is important to remember that the data provided to any machine learning model must be prepared correctly. For instance, the data used to test a model must be kept separate from the data used to train it. Consider a math teacher who tests her students with a copy of their homework: a student who has simply memorized the homework answers will score perfectly without having developed a strategy for solving new problems. Likewise, a model evaluated on its own training data may appear accurate while having only memorized the correct answers. The quality, quantity, and preparation of data determine the predictive quality of results.
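The homework analogy can be sketched in a few lines of Python. The helper names, the 80/20 split, and the toy "double the input" task are illustrative assumptions. A model that only memorizes scores perfectly on the data it was trained on and fails completely on held-out data, which is exactly what a separate test set reveals.

```python
import random


def train_test_split(records, test_fraction=0.2, seed=42):
    """Shuffle records and hold out a fraction that the model never trains on."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def memorizer(train):
    """A 'model' that only memorizes answers, like copying the homework."""
    answers = dict(train)
    return lambda x: answers.get(x)  # returns None for anything unseen


def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)


data = [(x, 2 * x) for x in range(100)]  # hypothetical task: double the input
train, test = train_test_split(data)
model = memorizer(train)
print("training accuracy:", accuracy(model, train))  # 1.0
print("held-out accuracy:", accuracy(model, test))   # 0.0
```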

Interested in optimizing your data for machine learning systems?

CONNECT WITH US

What are Artificial Neural Networks?

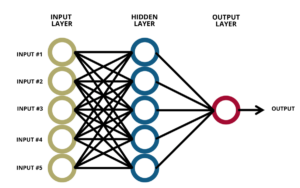

Artificial neural networks are a specific type of machine learning model loosely inspired by the way neurons in the human brain learn. These networks are made up of units (neurons) that are grouped in layers. In general, adding layers allows a network to work through more complicated problems. This neural system for processing information is what powers complicated machine learning processes, like deep learning.

In the human brain, neurons receive multiple inputs from the body’s senses, notifying them of sights, sounds, and touch sensations. The neurons process these inputs to determine whether the information will be passed along, ultimately prompting the brain to trigger an action.

The weight a neuron gives to an input changes with experience. In Pavlov’s famous experiment, the sound of a bell was not initially an important input for a dog’s brain. However, once experience taught the dogs that the bell was associated with food, their neurons began to recognize the pattern, and the bell alone prompted them to salivate.

To process information, each artificial neuron multiplies its inputs by a set of weights, which start at initial (often random) values, and uses the result to make a guess as to what input will result in what outcome. This guess may not be correct the first time, so the network measures the error and adjusts the weights to reduce it. As the system processes more information, the neurons continue to adjust their weights and learn to produce more accurate outcomes.
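The guess-and-adjust loop described above can be sketched with a single artificial neuron (a perceptron) learning the logical OR function. The starting weights of zero, the learning rate, and the function names are illustrative assumptions; real networks usually start from small random weights and train many neurons at once with gradient-based methods.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs plus the bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=10, learning_rate=0.1):
    weights, bias = [0.0, 0.0], 0.0  # starting values before any experience
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # how wrong was the guess?
            # Nudge each weight in the direction that reduces the error.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


or_samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_perceptron(or_samples)
print([predict(weights, bias, x) for x, _ in or_samples])  # [0, 1, 1, 1]
```

The early guesses are wrong, but after a few passes over the examples the weights settle on values that reproduce OR for every input.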

As more layers of neurons are introduced, the system becomes capable of increasingly complex abstraction and problem-solving. Deep learning systems use neural networks with numerous layers to accomplish tasks that machines could not previously achieve. These inner, or “hidden,” layers sit between the input and the output, so users see only the original data and the final layer of results. This is why artificial neural networks are considered black-box algorithms: the reasoning the machine follows to produce an answer is difficult to interpret.
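A classic illustration of why hidden layers matter is the XOR function (output 1 when exactly one input is 1). No single neuron of the kind shown above can compute it, but a network with one hidden layer can. In this sketch the weights are set by hand for clarity; in a real network they would be learned, which is part of why hidden layers are hard to interpret.

```python
def step(z):
    """Step activation: fire (1) when the weighted input is positive."""
    return 1 if z > 0 else 0


def neuron(inputs, weights, bias):
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_network(x1, x2):
    # Hidden layer: one neuron behaves like OR, the other like AND.
    h_or = neuron((x1, x2), (1, 1), -0.5)
    h_and = neuron((x1, x2), (1, 1), -1.5)
    # Output layer: "OR but not AND" is exactly XOR.
    return neuron((h_or, h_and), (1, -1), -0.5)


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, "->", xor_network(a, b))
```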

Interested in learning how your business can benefit from AI?

CONNECT WITH US

What is Deep Learning?

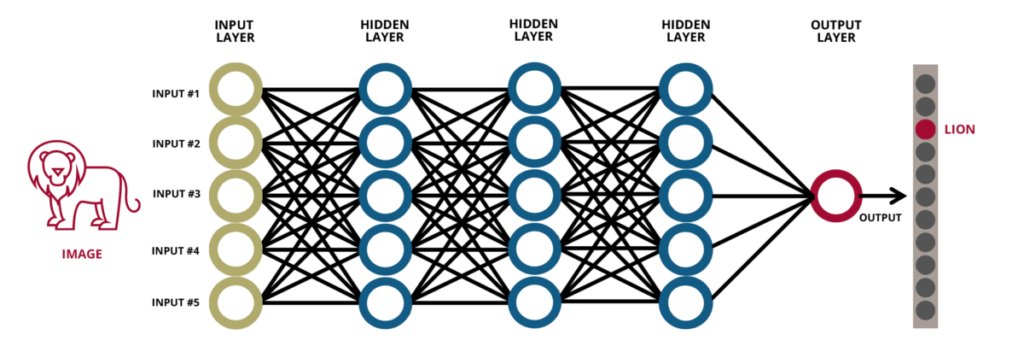

Deep learning is a type of machine learning that uses artificial neural networks to process unstructured data sets and generate intelligent decisions. A deep learning system typically contains large numbers of artificial neurons arranged in several hidden layers.

Deep learning can be applied to both labelled and unlabelled data, and its defining strength is that the algorithm itself derives meaningful features from raw data rather than relying on features specified by a person. This feature extraction allows the system to learn how to accomplish a specific task using a set of features while also learning the features themselves. Simply put, a deep learning system learns both what to look for and how to use it.

Consider the accompanying image of a deep learning system using a neural network to identify an image. The original data is an image of a lion. In a traditional machine learning model, a programmer or data scientist would have defined and labelled the features of the lion for the system: fur, yellow, big, eyes, outside. In a deep learning model, these features are not specified in advance; the system must identify them itself. At each layer, artificial neurons make an educated guess about the image and pass information on to the next layer, with early layers typically detecting simple patterns such as edges and later layers combining them into more complex shapes. These guesses may not be correct at first, but with more data and experience, the system learns how to increase its accuracy. After the data has been processed by all the layers, and the system has identified the data’s features, a classification is produced: lion.
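In image-recognition networks, the early layers are typically convolutional layers whose neurons respond to simple patterns such as edges. The sketch below applies one 3×3 vertical-edge filter to a tiny hypothetical image. The filter values are hand-picked for illustration; in a deep learning system, filter values like these are learned from data rather than specified by a programmer.

```python
def convolve2d(image, kernel):
    """'Valid' 2-D cross-correlation, the operation performed by convolutional layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 4x6 grayscale image: dark on the left, bright on the right.
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]
vertical_edge = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
for row in convolve2d(image, vertical_edge):
    print(row)  # [0, 27, 27, 0]: strong response only where brightness changes
```

The filter stays silent over flat regions and responds where dark meets bright; deeper layers would combine many such edge responses into shapes such as eyes or a mane.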

Image recognition is a major use case for deep learning. Many consider the 2012 ImageNet Large Scale Visual Recognition Challenge, in which a deep convolutional neural network (later known as AlexNet) entered by the University of Toronto team SuperVision swept away the competition, to be the spark that unleashed the significant investments in AI that are driving us quickly towards an AI-pervasive future.

The ability of deep learning systems to evaluate unstructured data sets holds vast possibilities, and large companies are investing heavily in this potential. Google is using deep learning to reduce the error rate of its speech recognition software, [2] and Microsoft has designed a deep learning system that can translate Chinese news articles into English with the same accuracy as a human. [3] Deep learning is also being used for machine vision, powering autonomous driving systems from companies like Tesla and interpreting radiology images for doctors. The technology is also fostering breakthroughs in generative adversarial networks (GANs), in which two neural networks are trained against each other, and natural language processing (NLP), which is allowing computers to understand and interact with humans.

[2] https://www.technologyreview.com/s/513696/deep-learning/

Learn more about how AI can be applied in your business.

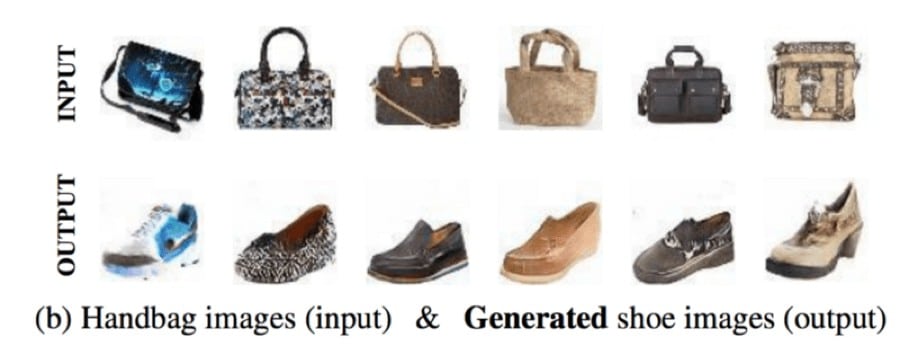

CONNECT WITH US

What are Generative Adversarial Networks?

Generative adversarial networks (GANs) are a class of AI algorithms used in unsupervised machine learning. These networks are made up of two different systems, one generative and one discriminative, which are trained together on a known data set. The goal of the generative system is to “fool” the discriminative system by producing synthesized candidates that appear to have come from the true data set.

The discriminative system, in turn, learns to accurately distinguish between instances from the true data distribution and the generator’s false candidates.
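The contest between the two systems is usually formalized, following the original GAN paper by Goodfellow et al. (2014), as a minimax game over a value function $V$. Here $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for a random noise input $z$, $p_{\text{data}}$ is the real data distribution, and $p_z$ is the noise distribution:

```latex
\min_{G} \max_{D} V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator tries to maximize $V$ by assigning high probability to real samples and low probability to generated ones, while the generator tries to minimize it by pushing $D(G(z))$ toward 1. In practice, the generator is often trained to maximize $\log D(G(z))$ instead, which gives it stronger learning signals early in training.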

As with all machine learning models, the key to success is iterative trial and error. The task of the generative system might be to produce an image based on a text description. The figure below shows textual input describing a sunflower. The system’s first attempt at producing an image based on this text is clearly not a sunflower, so the discriminative system can easily identify this candidate as an impostor among the real sunflower images in its data set. This process is repeated, with the discriminative system becoming better at flagging synthetic images and the generative system learning how to better deceive it. Eventually, the generative system succeeds in fooling the discriminative system, and a photo-realistic sunflower is produced purely by computer intelligence.

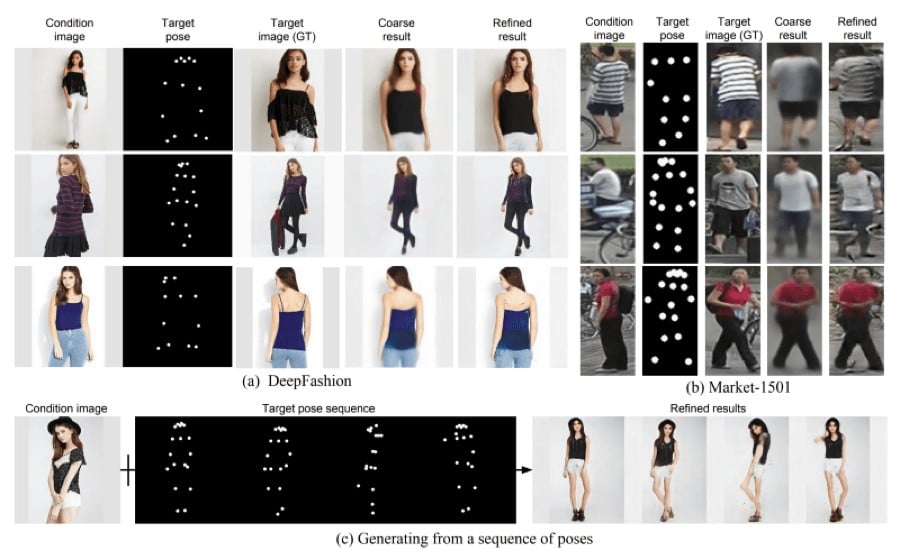

Generative adversarial networks (GANs) are becoming known for their uncanny ability to produce photo-realistic images and even video. There are numerous potential applications in the e-commerce economy alone. One complex problem being looked at is pose generation. Specially trained GANs are able to learn the nuances of human poses from training sets and then produce lifelike images based on input data and past experience. The figure below demonstrates how a GAN can extract a target pose from a target image and apply that knowledge to a new conditional image. The result is a new image, generated to show the original model in a new pose.

Large companies, like Facebook, [5] are currently funding AI think tanks that are exploring the potential applications of GANs. Many applications of GANs extend beyond their remarkable imaging ability. Given a particular training data set, GANs can compose original songs in specific genres. They could also potentially help make medical diagnoses based on a patient’s symptoms, history, and pathology. Using these details and any images of her ailment, a GAN could generate possible causes and evaluate them against relevant data. In time, such a system could teach itself to make increasingly accurate diagnoses.

As with most other AI projects, GANs require a specially prepared data set to achieve their goals. Training data must be presented in such a way that a GAN can learn to identify elements before it can accurately replicate them. Similarly, if the discriminative system does not receive appropriate data, it cannot identify the generated candidates, which removes the generative system’s chance to improve. If you are considering implementing a GAN, or any other AI system, to help your business achieve its goals, be sure you choose the right data to teach it. Contact The Burnie Group today to learn how we can help.

[1] Ma, Liqian, Xu Jia, Qianru Sun, Bernt Schiele, Tinne Tuytelaars, and Luc Van Gool. “Pose guided person image generation.” In Advances in Neural Information Processing Systems, pp. 406-416. 2017.

[2] https://github.com/carpedm20/DiscoGAN-pytorch

[3] Sajjadi, Mehdi SM, Bernhard Schölkopf, and Michael Hirsch. “Enhancenet: Single image super-resolution through automated texture synthesis.” In Computer Vision (ICCV), 2017 IEEE International Conference on, pp. 4501-4510. IEEE, 2017. https://ieeexplore.ieee.org/abstract/document/8237743/

[4] https://www.techrepublic.com/article/how-generative-adversarial-networks-gans-make-ai-systems-smarter/

[5] https://www.scientificamerican.com/article/when-will-computers-have-common-sense-ask-facebook/

Interested in learning more about AI's applications in your business?

CONNECT WITH US

What is Machine Vision?

Machine vision is the ability of a computer system to identify and process images much as a human would. As with human sight, the goal of machine vision is for a computer system not only to “see” images but to “understand” them. To do this, sensors (usually cameras) work in tandem with AI software algorithms, such as neural networks and deep learning systems.

Unlike traditional image processing, where the output is another image, the goal of machine vision is to extract specific information from the image and infer a meaning. Think of your social media account: you upload a photo and the system magically suggests people to tag in it. What is really going on is that machine vision extracts the features of each person in the photo and evaluates the likelihood that those features match one of your friends. With each photo processed, the system gets better and better at matching names to faces.
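The matching step can be sketched as comparing a face's feature vector against stored vectors for known friends and suggesting the closest match only if it is similar enough. The three-number feature vectors, the names, and the 0.9 threshold are all hypothetical; real systems derive much longer feature vectors from a trained deep network.

```python
import math


def cosine_similarity(a, b):
    """1.0 means the two feature vectors point in exactly the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def suggest_tag(face_features, known_friends, threshold=0.9):
    """Return the best-matching friend's name, or None if nobody is close enough."""
    best_name, best_score = None, threshold
    for name, features in known_friends.items():
        score = cosine_similarity(face_features, features)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical stored features for two friends.
known_friends = {"Alice": [0.9, 0.1, 0.3], "Bob": [0.1, 0.8, 0.5]}
print(suggest_tag([0.88, 0.12, 0.31], known_friends))  # Alice
print(suggest_tag([0.5, 0.5, -0.7], known_friends))    # None
```

The threshold is what keeps the system from tagging a stranger as the nearest friend; each confirmed tag would give a real system more examples to refine its stored features.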

Currently, machine vision is primarily used in industry to improve automatic inspection and robotic process guidance. Bottling facilities, for instance, use a machine vision system for quality control. When bottles reach an inspection sensor, cameras are triggered, sending an image of the bottle to the machine vision system. The system interprets the unstructured information of the image and produces the required answer: yes, this is a properly filled bottle, or no, this bottle should be rejected. Historically, this kind of inspection has been done by hand. Now, the machine judges each bottle quickly and accurately, while an employee reviews the machine’s processes and can check each rejected bottle, just to be sure.
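The yes-or-no decision in the bottling example can be sketched as a rule that measures the fill level from an image and checks it against a tolerance. Everything here is a hypothetical simplification: the tiny grayscale "images", the brightness threshold, and the 80% to 95% tolerance band. A production system would work from real camera frames and often a trained model; this only illustrates turning an image into an accept or reject answer.

```python
def fill_fraction(image, dark_threshold=100):
    """Fraction of image rows whose average brightness looks like liquid (dark)."""
    dark_rows = sum(1 for row in image if sum(row) / len(row) < dark_threshold)
    return dark_rows / len(image)


def inspect_bottle(image, min_fill=0.8, max_fill=0.95):
    """Return 'accept' if the fill level is within tolerance, otherwise 'reject'."""
    level = fill_fraction(image)
    return "accept" if min_fill <= level <= max_fill else "reject"


def make_bottle_image(filled_rows, total_rows=10, width=4):
    """Hypothetical test image: bright empty rows on top, dark liquid rows below."""
    empty = [[220] * width for _ in range(total_rows - filled_rows)]
    liquid = [[30] * width for _ in range(filled_rows)]
    return empty + liquid


print(inspect_bottle(make_bottle_image(9)))  # accept (90% full)
print(inspect_bottle(make_bottle_image(5)))  # reject (50% full)
```

Rejected bottles would then go to the employee for the manual double-check the text describes.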

Machine vision is highly applicable to many industries, lowering production costs and increasing product quality. It will also be fundamental in transforming transportation, as it plays a central role in autonomous vehicles. The ability of machine vision to extract information from images and understand it is proving invaluable in a great number and variety of use cases.

Want to apply machine vision in your business?

CONNECT WITH US

What is Natural Language Processing?

Natural language processing (NLP) is the ability of a computer system to understand, interpret, and generate written and spoken language. Human communication is incredibly complex, has many nuances, and is often confusing, as people use colloquialisms and innuendo and frequently misspell words. Using NLP, AI-enabled systems, such as IBM’s Watson, have developed the ability to recognize and respond to such nuances and ambiguity.

Machine learning models can be taught to recognize language rules and patterns to provide some understanding of a text’s semantics (word meaning), syntax (sentence structure), and contextual information. Traditional rules-based approaches, however, are time-consuming to build and less proficient at handling issues such as misspellings. Natural language processing systems built on deep learning algorithms can keep learning from new language data, enabling them to solve complicated tasks quickly and efficiently. Google’s web search, for instance, returns results almost instantly. In this brief time, the system uses NLP to evaluate the linguistic elements of the query and cross-reference this information with what it knows about the user. To execute a simple search for “java,” for example, the system has to understand the query itself and determine, based on the user’s search history, whether to show a list of local coffee shops or websites for software downloads.
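The “java” example can be illustrated with a deliberately simple sketch that picks the meaning whose keywords overlap most with the user's past queries. The keyword lists and function name are hypothetical, and nothing here reflects how Google actually ranks results; production search engines rely on far richer, learned models.

```python
# Hypothetical keyword lists for two meanings of the query "java".
SENSES = {
    "local coffee shops": {"coffee", "espresso", "latte", "cafe", "beans"},
    "software downloads": {"programming", "code", "jdk", "compiler", "developer"},
}


def choose_sense(past_queries, senses=SENSES):
    """Pick the meaning whose keywords overlap most with the user's history."""
    history_words = {word for query in past_queries for word in query.lower().split()}
    # max() keeps the first sense listed when scores tie.
    return max(senses, key=lambda sense: len(senses[sense] & history_words))


print(choose_sense(["best latte downtown", "cafe near me"]))  # local coffee shops
print(choose_sense(["python code review", "install jdk 17"]))  # software downloads
```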

Basic NLP tasks include parsing, stemming, tagging, language detection, and identification of semantic relationships. By taking in text- or voice-based data, NLP deep learning algorithms identify and interpret linguistic features in order to perform the requested task. Some common voice-based systems, like the digital assistants Alexa and Google Assistant, first translate spoken commands into text. In the space of a few seconds, such a system listens to what has been said, breaks it down into clips of ten to twenty milliseconds, identifies units of speech, and compares these with its known samples of pre-recorded speech. Using semantic analysis, the system can then identify an appropriate answer. These systems are known as intelligent virtual agents (IVAs) and have many uses in industries such as healthcare and telecommunications.
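The step of breaking speech into short clips, often called framing, can be sketched as below. The 16 kHz sample rate, 20 ms frame length, and 10 ms step between frame starts are assumed values for illustration; many systems use overlapping frames like these, though exact settings vary.

```python
def frame_signal(samples, sample_rate=16000, frame_ms=20, hop_ms=10):
    """Split an audio signal into short, possibly overlapping frames."""
    frame_len = int(sample_rate * frame_ms / 1000)  # samples per frame
    hop_len = int(sample_rate * hop_ms / 1000)      # samples between frame starts
    return [
        samples[start:start + frame_len]
        for start in range(0, len(samples) - frame_len + 1, hop_len)
    ]


one_second = [0.0] * 16000  # placeholder for one second of 16 kHz audio
frames = frame_signal(one_second)
print(len(frames), len(frames[0]))  # 99 320
```

Each 320-sample frame is short enough that the sound within it is roughly constant, which is what lets later stages match it against known units of speech.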

More complicated NLP tasks include categorization, summarization, translation, and extraction. In content categorization, a system reviews textual content, indexes it, detects duplication, and alerts the user to specific content. Because they can accurately recognize patterns, nuances, and context, these systems can also summarize large bodies of text and translate text or speech into different languages. The most complicated level yet involves teaching an NLP system to identify sentiment and extract opinions from text, a task that can be difficult even for native speakers.
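Duplicate detection, one of the content-categorization steps above, can be sketched with a simple word-overlap (Jaccard) score. This basic technique is swapped in for illustration rather than the deep learning approach the text describes, and the 0.8 threshold is an arbitrary assumption.

```python
def word_set(text):
    """Lower-case the text and split it into a set of words."""
    return set(text.lower().split())


def jaccard_similarity(a, b):
    """Share of distinct words the two texts have in common (0.0 to 1.0)."""
    words_a, words_b = word_set(a), word_set(b)
    if not words_a and not words_b:
        return 1.0  # two empty texts are treated as identical
    return len(words_a & words_b) / len(words_a | words_b)


def is_duplicate(a, b, threshold=0.8):
    return jaccard_similarity(a, b) >= threshold


print(is_duplicate("The cat sat on the mat", "the cat sat on a mat"))   # True
print(is_duplicate("The cat sat on the mat", "Quarterly sales rose"))  # False
```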

Find out more about how natural language processing can be applied in your business.

CONNECT WITH US

What is Cognitive Computing?

Cognitive computing is the combination of a suite of AI tools that aim to simulate the workings of the human brain. These systems don’t just see (like a machine vision system) or listen (using natural language processing); they can perform many functions, including complex question answering (Q&A). These systems specialize in processing and analyzing large, unstructured data sets, producing high-level decisions based on conceptual information rather than raw data alone.

Cognitive computing systems are a complex architecture of subsystems, each using deep learning algorithms built on neural networks to process data and evaluate it against training sets. IBM’s Watson, for example, is being trained to improve medical diagnostics. The goal is for such systems to be able to process a patient’s verbal, textual, and visual symptoms; compare them to a vast database of possible ailments and published studies; and present a summary of findings, a likely diagnosis, and possible courses of treatment for a doctor to review.

A cognitive computing system can exhibit high-level AI features, including adaptability, interactiveness, and contextual and iterative understanding. An adaptive system can learn as information changes and goals evolve. An interactive system allows users to interact with it comfortably, and the system itself can easily interact with other devices and cloud services. Using NLP, a cognitive system can achieve a high level of contextual understanding: it can draw on multiple sources of both structured and unstructured information to identify and extract the contextual elements necessary to complete a task. A cognitive system may also remember previous iterations of a task, locating suitable information to better complete its current task. It can also ask questions or seek additional sources of information if the data provided is incomplete or unsuitable.

Cognitive computing systems are in the early stages of development, and there is still a long way to go before their potential is realized. However, the individual AI elements that make up cognitive computing systems are in common use and continue to be improved and adopted across industries, and a fair number of high-functioning cognitive systems are already being used productively.

Discover how AI can benefit your business.

CONTACT US